It’s an uncommon story in many ways, not least of all because it defies many of the Silicon Valley stereotypes we’ve grown accustomed to.

It’s an uncommon story in many ways, not least of all because it defies many of the Silicon Valley stereotypes we’ve grown accustomed to.

The first story, the story of Google Translate, takes place in Mountain View over nine months, and it explains the transformation of machine translation. The second story, the story of Google Brain and its many competitors, takes place in Silicon Valley over five years, and it explains the transformation of that entire community. The third story, the story of deep learning, takes place in a variety of far-flung laboratories — in Scotland, Switzerland, Japan and most of all Canada — over seven decades, and it might very well contribute to the revision of our self-image as first and foremost beings who think.

All three are stories about artificial intelligence. The seven-decade story is about what we might conceivably expect or want from it. The five-year story is about what it might do in the near future. The nine-month story is about what it can do right this minute. These three stories are themselves just proof of concept. All of this is only the beginning.

Part I: Learning Machine

1. The Birth of Brain

Jeff Dean, though his title is senior fellow, is the de facto head of Google Brain. Dean is a sinewy, energy-efficient man with a long, narrow face, deep-set eyes and an earnest, soapbox-derby sort of enthusiasm. The son of a medical anthropologist and a public-health epidemiologist, Dean grew up all over the world — Minnesota, Hawaii, Boston, Arkansas, Geneva, Uganda, Somalia, Atlanta — and, while in high school and college, wrote software used by the World Health Organization. He has been with Google since 1999, as employee 25ish, and has had a hand in the core software systems beneath nearly every significant undertaking since then. A beloved artifact of company culture is Jeff Dean Facts, written in the style of the Chuck Norris Facts meme: “Jeff Dean’s PIN is the last four digits of pi.” “When Alexander Graham Bell invented the telephone, he saw a missed call from Jeff Dean.” “Jeff Dean got promoted to Level 11 in a system where the maximum level is 10.” (This last one is, in fact, true.)

One day in early 2011, Dean walked into one of the Google campus’s “microkitchens” — the “Googley” word for the shared break spaces on most floors of the Mountain View complex’s buildings — and ran into Andrew Ng, a young Stanford computer-science professor who was working for the company as a consultant. Ng told him about Project Marvin, an internal effort (named after the celebrated A.I. pioneer Marvin Minsky) he had recently helped establish to experiment with “neural networks,” pliant digital lattices based loosely on the architecture of the brain. Dean himself had worked on a primitive version of the technology as an undergraduate at the University of Minnesota in 1990, during one of the method’s brief windows of mainstream acceptability. Now, over the previous five years, the number of academics working on neural networks had begun to grow again, from a handful to a few dozen. Ng told Dean that Project Marvin, which was being underwritten by Google’s secretive X lab, had already achieved some promising results.

Dean was intrigued enough to lend his “20 percent” — the portion of work hours every Google employee is expected to contribute to programs outside his or her core job — to the project. Pretty soon, he suggested to Ng that they bring in another colleague with a neuroscience background, Greg Corrado. (In graduate school, Corrado was taught briefly about the technology, but strictly as a historical curiosity. “It was good I was paying attention in class that day,” he joked to me.) In late spring they brought in one of Ng’s best graduate students, Quoc Le, as the project’s first intern. By then, a number of the Google engineers had taken to referring to Project Marvin by another name: Google Brain.

Since the term “artificial intelligence” was first coined, at a kind of constitutional convention of the mind at Dartmouth in the summer of 1956, a majority of researchers have long thought the best approach to creating A.I. would be to write a very big, comprehensive program that laid out both the rules of logical reasoning and sufficient knowledge of the world. If you wanted to translate from English to Japanese, for example, you would program into the computer all of the grammatical rules of English, and then the entirety of definitions contained in the Oxford English Dictionary, and then all of the grammatical rules of Japanese, as well as all of the words in the Japanese dictionary, and only after all of that feed it a sentence in a source language and ask it to tabulate a corresponding sentence in the target language. You would give the machine a language map that was, as Borges would have had it, the size of the territory. This perspective is usually called “symbolic A.I.” — because its definition of cognition is based on symbolic logic — or, disparagingly, “good old-fashioned A.I.”

There are two main problems with the old-fashioned approach. The first is that it’s awfully time-consuming on the human end. The second is that it only really works in domains where rules and definitions are very clear: in mathematics, for example, or chess. Translation, however, is an example of a field where this approach fails horribly, because words cannot be reduced to their dictionary definitions, and because languages tend to have as many exceptions as they have rules. More often than not, a system like this is liable to translate “minister of agriculture” as “priest of farming.” Still, for math and chess it worked great, and the proponents of symbolic A.I. took it for granted that no activities signaled “general intelligence” better than math and chess.

There were, however, limits to what this system could do. In the 1980s, a robotics researcher at Carnegie Mellon pointed out that it was easy to get computers to do adult things but nearly impossible to get them to do things a 1-year-old could do, like hold a ball or identify a cat. By the 1990s, despite punishing advancements in computer chess, we still weren’t remotely close to artificial general intelligence.

There has always been another vision for A.I. — a dissenting view — in which the computers would learn from the ground up (from data) rather than from the top down (from rules). This notion dates to the early 1940s, when it occurred to researchers that the best model for flexible automated intelligence was the brain itself. A brain, after all, is just a bunch of widgets, called neurons, that either pass along an electrical charge to their neighbors or don’t. What’s important are less the individual neurons themselves than the manifold connections among them. This structure, in its simplicity, has afforded the brain a wealth of adaptive advantages. The brain can operate in circumstances in which information is poor or missing; it can withstand significant damage without total loss of control; it can store a huge amount of knowledge in a very efficient way; it can isolate distinct patterns but retain the messiness necessary to handle ambiguity.

There was no reason you couldn’t try to mimic this structure in electronic form, and in 1943 it was shown that arrangements of simple artificial neurons could carry out basic logical functions. They could also, at least in theory, learn the way we do. With life experience, depending on a particular person’s trials and errors, the synaptic connections among pairs of neurons get stronger or weaker. An artificial neural network could do something similar, by gradually altering, on a guided trial-and-error basis, the numerical relationships among artificial neurons. It wouldn’t need to be preprogrammed with fixed rules. It would, instead, rewire itself to reflect patterns in the data it absorbed.

This attitude toward artificial intelligence was evolutionary rather than creationist. If you wanted a flexible mechanism, you wanted one that could adapt to its environment. If you wanted something that could adapt, you didn’t want to begin with the indoctrination of the rules of chess. You wanted to begin with very basic abilities — sensory perception and motor control — in the hope that advanced skills would emerge organically. Humans don’t learn to understand language by memorizing dictionaries and grammar books, so why should we possibly expect our computers to do so?

Google Brain was the first major commercial institution to invest in the possibilities embodied by this way of thinking about A.I. Dean, Corrado and Ng began their work as a part-time, collaborative experiment, but they made immediate progress. They took architectural inspiration for their models from recent theoretical outlines — as well as ideas that had been on the shelf since the 1980s and 1990s — and drew upon both the company’s peerless reserves of data and its massive computing infrastructure. They instructed the networks on enormous banks of “labeled” data — speech files with correct transcriptions, for example — and the computers improved their responses to better match reality.

“The portion of evolution in which animals developed eyes was a big development,” Dean told me one day, with customary understatement. We were sitting, as usual, in a whiteboarded meeting room, on which he had drawn a crowded, snaking timeline of Google Brain and its relation to inflection points in the recent history of neural networks. “Now computers have eyes. We can build them around the capabilities that now exist to understand photos. Robots will be drastically transformed. They’ll be able to operate in an unknown environment, on much different problems.” These capacities they were building may have seemed primitive, but their implications were profound.

2. The Unlikely Intern

In its first year or so of existence, Brain’s experiments in the development of a machine with the talents of a 1-year-old had, as Dean said, worked to great effect. Its speech-recognition team swapped out part of their old system for a neural network and encountered, in pretty much one fell swoop, the best quality improvements anyone had seen in 20 years. Their system’s object-recognition abilities improved by an order of magnitude. This was not because Brain’s personnel had generated a sheaf of outrageous new ideas in just a year. It was because Google had finally devoted the resources — in computers and, increasingly, personnel — to fill in outlines that had been around for a long time.

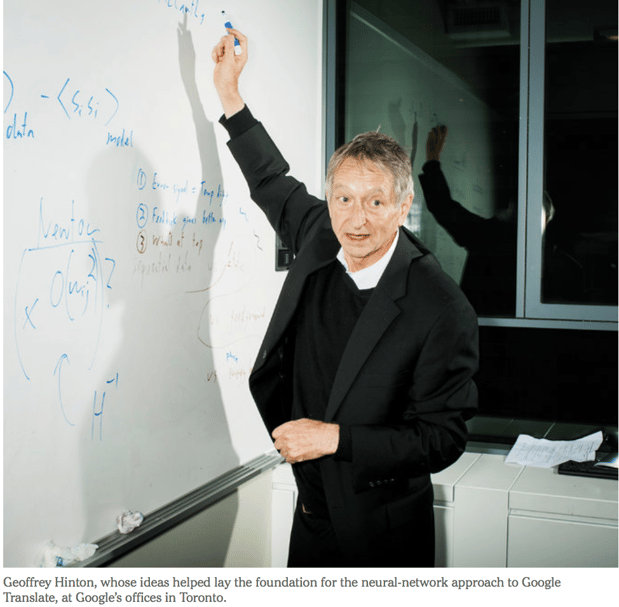

A great preponderance of these extant and neglected notions had been proposed or refined by a peripatetic English polymath named Geoffrey Hinton. In the second year of Brain’s existence, Hinton was recruited to Brain as Andrew Ng left. (Ng now leads the 1,300-person A.I. team at Baidu.) Hinton wanted to leave his post at the University of Toronto for only three months, so for arcane contractual reasons he had to be hired as an intern. At intern training, the orientation leader would say something like, “Type in your LDAP” — a user login — and he would flag a helper to ask, “What’s an LDAP?” All the smart 25-year-olds in attendance, who had only ever known deep learning as the sine qua non of artificial intelligence, snickered: “Who is that old guy? Why doesn’t he get it?”

“At lunchtime,” Hinton said, “someone in the queue yelled: ‘Professor Hinton! I took your course! What are you doing here?’ After that, it was all right.”

A few months later, Hinton and two of his students demonstrated truly astonishing gains in a big image-recognition contest, run by an open-source collective called ImageNet, that asks computers not only to identify a monkey but also to distinguish between spider monkeys and howler monkeys, and among God knows how many different breeds of cat. Google soon approached Hinton and his students with an offer. They accepted. “I thought they were interested in our I.P.,” he said. “Turns out they were interested in us.”

Hinton comes from one of those old British families emblazoned like the Darwins at eccentric angles across the intellectual landscape, where regardless of titular preoccupation a person is expected to make sideline contributions to minor problems in astronomy or fluid dynamics. His great-great-grandfather was George Boole, whose foundational work in symbolic logic underpins the computer; another great-great-grandfather was a celebrated surgeon, his father a venturesome entomologist, his father’s cousin a Los Alamos researcher; the list goes on. He trained at Cambridge and Edinburgh, then taught at Carnegie Mellon before he ended up at Toronto, where he still spends half his time. (His work has long been supported by the largess of the Canadian government.) I visited him in his office at Google there. He has tousled yellowed-pewter hair combed forward in a mature Noel Gallagher style and wore a baggy striped dress shirt that persisted in coming untucked, and oval eyeglasses that slid down to the tip of a prominent nose. He speaks with a driving if shambolic wit, and says things like, “Computers will understand sarcasm before Americans do.”

Hinton had been working on neural networks since his undergraduate days at Cambridge in the late 1960s, and he is seen as the intellectual primogenitor of the contemporary field. For most of that time, whenever he spoke about machine learning, people looked at him as though he were talking about the Ptolemaic spheres or bloodletting by leeches. Neural networks were taken as a disproven folly, largely on the basis of one overhyped project: the Perceptron, an artificial neural network that Frank Rosenblatt, a Cornell psychologist, developed in the late 1950s. The New York Times reported that the machine’s sponsor, the United States Navy, expected it would “be able to walk, talk, see, write, reproduce itself and be conscious of its existence.” It went on to do approximately none of those things. Marvin Minsky, the dean of artificial intelligence in America, had worked on neural networks for his 1954 Princeton thesis, but he’d since grown tired of the inflated claims that Rosenblatt — who was a contemporary at Bronx Science — made for the neural paradigm. (He was also competing for Defense Department funding.) Along with an M.I.T. colleague, Minsky published a book that proved that there were painfully simple problems the Perceptron could never solve.

Minsky’s criticism of the Perceptron extended only to networks of one “layer,” i.e., one layer of artificial neurons between what’s fed to the machine and what you expect from it — and later in life, he expounded ideas very similar to contemporary deep learning. But Hinton already knew at the time that complex tasks could be carried out if you had recourse to multiple layers. The simplest description of a neural network is that it’s a machine that makes classifications or predictions based on its ability to discover patterns in data. With one layer, you could find only simple patterns; with more than one, you could look for patterns of patterns. Take the case of image recognition, which tends to rely on a contraption called a “convolutional neural net.” (These were elaborated in a seminal 1998 paper whose lead author, a Frenchman named Yann LeCun, did his postdoctoral research in Toronto under Hinton and now directs a huge A.I. endeavor at Facebook.) The first layer of the network learns to identify the very basic visual trope of an “edge,” meaning a nothing (an off-pixel) followed by a something (an on-pixel) or vice versa. Each successive layer of the network looks for a pattern in the previous layer. A pattern of edges might be a circle or a rectangle. A pattern of circles or rectangles might be a face. And so on. This more or less parallels the way information is put together in increasingly abstract ways as it travels from the photoreceptors in the retina back and up through the visual cortex. At each conceptual step, detail that isn’t immediately relevant is thrown away. If several edges and circles come together to make a face, you don’t care exactly where the face is found in the visual field; you just care that it’s a face.

A demonstration from 1993 showing an early version of the researcher Yann LeCun's convolutional neural network, which by the late 1990s was processing 10 to 20 percent of all checks in the United States. A similar technology now drives most state-of-the-art image-recognition systems.

The issue with multilayered, “deep” neural networks was that the trial-and-error part got extraordinarily complicated. In a single layer, it’s easy. Imagine that you’re playing with a child. You tell the child, “Pick up the green ball and put it into Box A.” The child picks up a green ball and puts it into Box B. You say, “Try again to put the green ball in Box A.” The child tries Box A. Bravo.

Now imagine you tell the child, “Pick up a green ball, go through the door marked 3 and put the green ball into Box A.” The child takes a red ball, goes through the door marked 2 and puts the red ball into Box B. How do you begin to correct the child? You cannot just repeat your initial instructions, because the child does not know at which point he went wrong. In real life, you might start by holding up the red ball and the green ball and saying, “Red ball, green ball.” The whole point of machine learning, however, is to avoid that kind of explicit mentoring. Hinton and a few others went on to invent a solution (or rather, reinvent an older one) to this layered-error problem, over the halting course of the late 1970s and 1980s, and interest among computer scientists in neural networks was briefly revived. “People got very excited about it,” he said. “But we oversold it.” Computer scientists quickly went back to thinking that people like Hinton were weirdos and mystics.

These ideas remained popular, however, among philosophers and psychologists, who called it “connectionism” or “parallel distributed processing.” “This idea,” Hinton told me, “of a few people keeping a torch burning, it’s a nice myth. It was true within artificial intelligence. But within psychology lots of people believed in the approach but just couldn’t do it.” Neither could Hinton, despite the generosity of the Canadian government. “There just wasn’t enough computer power or enough data. People on our side kept saying, ‘Yeah, but if I had a really big one, it would work.’ It wasn’t a very persuasive argument.”... To be continued.

Check out new digital marketing trend in 2017 by cliking on the video below